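Deploying models with tf-serving

- I had always used PyTorch, so I never bothered with the whole tf-serving business when it came to deployment. Recently I have been using tf and keras for a while and ran into deployment, which of course means serving, so here is a usage summary for my own future reference, so I don't forget it again.

- There is plenty of material online; if you already know this stuff, there is no need to read on.

- Let's start with ordinary tf model code.

Define a simple tf model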

    import os
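A minimal sketch of what defining and saving such a model might look like. The architecture (4 input features, one hidden layer), the `model` base directory, the version number `1`, and the function names are all assumptions made for illustration, not the model used in this post. TensorFlow is imported inside the function so the path helper can be used on its own.

```python
from pathlib import Path


def version_dir(base_dir, version=1):
    """TF-Serving expects numeric version subdirectories, e.g. model/1."""
    return Path(base_dir) / str(version)


def build_and_export(base_dir="model", version=1):
    # Imported lazily; this function needs TensorFlow 2.x installed.
    import tensorflow as tf

    # Hypothetical fully connected network: 4 inputs -> 16 hidden -> 1 output.
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(4,)),
        tf.keras.layers.Dense(16, activation="relu"),
        tf.keras.layers.Dense(1),
    ])
    model.compile(optimizer="adam", loss="mse")

    export_dir = version_dir(base_dir, version)
    if hasattr(model, "export"):
        # Newer Keras releases provide export() for serving-ready SavedModels.
        model.export(str(export_dir))
    else:
        # Older TF 2.x releases: write a SavedModel directly.
        tf.saved_model.save(model, str(export_dir))
    return export_dir
```

Calling `build_and_export()` should leave a SavedModel under `model/1`, which is the layout the Docker command further down mounts into the container.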

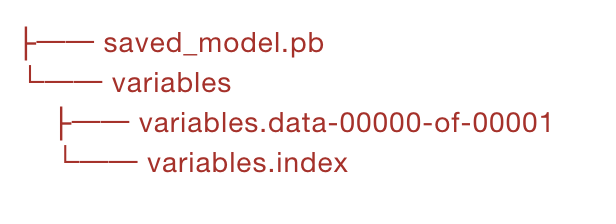

- The directory the model is saved to contains roughly these files
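For reference, a SavedModel export normally holds a `saved_model.pb` file plus a `variables/` directory (and sometimes `assets/`), and TF-Serving looks for these inside a numeric version subdirectory such as `model/1`. The helper below is a small sketch for checking that layout before starting the server; the function name is hypothetical.

```python
from pathlib import Path


def find_servable_versions(model_dir):
    """Return the numeric version subdirectories that contain a saved_model.pb."""
    versions = []
    for child in Path(model_dir).iterdir():
        # Only directories named with digits count as versions.
        if child.is_dir() and child.name.isdigit():
            if (child / "saved_model.pb").is_file():
                versions.append(int(child.name))
    return sorted(versions)
```

An empty result for `find_servable_versions("model")` would suggest the export landed directly in `model/` instead of `model/1/`.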

Next, pull a TensorFlow-Serving Docker image

- docker pull tensorflow/serving

Once the download finishes, cd to the parent directory of the "model" directory produced above and run the following command

    TESTDATA="$(pwd)/model"
    docker run -t --rm -p 8501:8501 \
        -v "$TESTDATA:/models/simple_fc_nn" \
        -e MODEL_NAME=simple_fc_nn \
        tensorflow/serving

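That is enough to get the model served. The port number can be changed to whatever you like, and simple_fc_nn is the model name I picked myself; it is needed later when accessing the TF-Serving service through the REST API.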

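Checking the service status through the REST API

Note that the simple_fc_nn in the URL is the name specified when starting the TF-Serving service.

Also, localhost is used here, so the command has to be run on the same machine that TF-Serving is running on.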
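As a rough sketch, the status check can also be done from Python with only the standard library. It assumes the server from the Docker command is listening on port 8501 and uses TF-Serving's `GET /v1/models/<name>` status endpoint; the function names are made up for illustration.

```python
import json
from urllib.request import urlopen


def status_url(model_name, host="localhost", port=8501):
    """Build the model status URL for the TF-Serving REST API."""
    return f"http://{host}:{port}/v1/models/{model_name}"


def get_model_status(model_name, host="localhost", port=8501):
    """Fetch the status JSON; needs a running TF-Serving instance."""
    with urlopen(status_url(model_name, host, port), timeout=5) as resp:
        return json.load(resp)


def available_versions(status):
    """List the versions whose state is reported as AVAILABLE."""
    return [
        entry["version"]
        for entry in status.get("model_version_status", [])
        if entry.get("state") == "AVAILABLE"
    ]
```

With the container up, `available_versions(get_model_status("simple_fc_nn"))` should list the loaded version numbers as strings.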

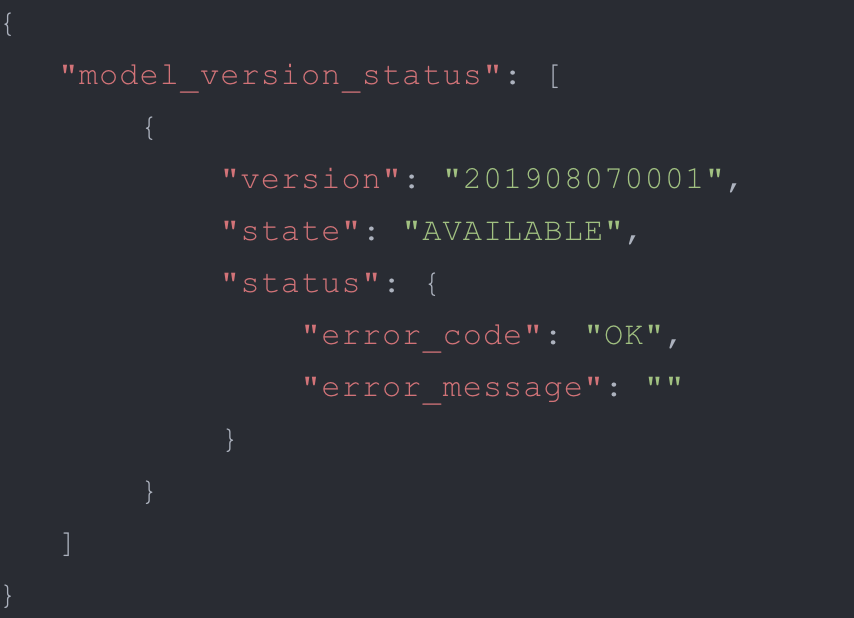

Returns:

Viewing the model's metadata through the REST API
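A small sketch of reading the metadata from Python, assuming the same host and port as before. The endpoint is `GET /v1/models/<name>/metadata`; the helper names are hypothetical, and the input name you get back depends entirely on how the model was exported.

```python
import json
from urllib.request import urlopen


def metadata_url(model_name, host="localhost", port=8501):
    """Build the model metadata URL for the TF-Serving REST API."""
    return f"http://{host}:{port}/v1/models/{model_name}/metadata"


def get_model_metadata(model_name, host="localhost", port=8501):
    """Fetch the metadata JSON; needs a running TF-Serving instance."""
    with urlopen(metadata_url(model_name, host, port), timeout=5) as resp:
        return json.load(resp)


def input_names(metadata, signature="serving_default"):
    """Return the input tensor keys of one signature, sorted by name."""
    # The REST response nests the signatures under two "signature_def" keys.
    signatures = metadata["metadata"]["signature_def"]["signature_def"]
    return sorted(signatures[signature]["inputs"])
```

The names returned by `input_names(...)` are useful when a request has to address inputs by key instead of sending plain rows.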

Performance-testing TF-Serving with Apache ab

post.txt holds the input data, converted to JSON format.

- ab -n 100000 -c 50 -T 'application/json' -p ./post.txt http://localhost:8501/v1/models/simple_fc_nn:predict
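A sketch of how post.txt could be produced. The predict endpoint accepts a JSON body of the form `{"instances": [...]}`; the single 4-feature row in the usage note is an assumed input shape for illustration and has to match whatever your model actually expects.

```python
import json


def write_post_file(path, instances):
    """Write a TF-Serving predict request body to the given file."""
    payload = {"instances": instances}
    with open(path, "w", encoding="utf-8") as f:
        json.dump(payload, f)
    return payload
```

For example, `write_post_file("post.txt", [[0.1, 0.2, 0.3, 0.4]])` writes one input row, and ab then sends that same body on every request.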

Converting a keras model to the pb format tf-serving can use

- Just look at the code

    def keras_model_2_tf_serving():
        ...
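One possible way to do such a conversion, as a sketch only: load a Keras `.h5` file and write it out as a SavedModel under the next free version directory. The `.h5` input, the `model` base directory, and both function names are assumptions for illustration, and this is not necessarily how `keras_model_2_tf_serving` does it.

```python
from pathlib import Path


def next_version_dir(base_dir):
    """Pick the next unused numeric version directory under base_dir."""
    base = Path(base_dir)
    existing = []
    if base.is_dir():
        existing = [int(p.name) for p in base.iterdir()
                    if p.is_dir() and p.name.isdigit()]
    return base / str(max(existing, default=0) + 1)


def export_keras_h5(h5_path, base_dir="model"):
    # Imported lazily; this function needs TensorFlow 2.x installed.
    import tensorflow as tf

    model = tf.keras.models.load_model(h5_path)
    export_dir = next_version_dir(base_dir)
    if hasattr(model, "export"):
        # Newer Keras releases provide export() for serving-ready SavedModels.
        model.export(str(export_dir))
    else:
        # Older TF 2.x releases: write a SavedModel directly.
        tf.saved_model.save(model, str(export_dir))
    return export_dir
```

Writing each conversion to a new version number means a running TF-Serving instance that watches the base directory can pick up the new version without the old one being overwritten.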

- That is roughly what it looks like; as long as you get the idea, 👌